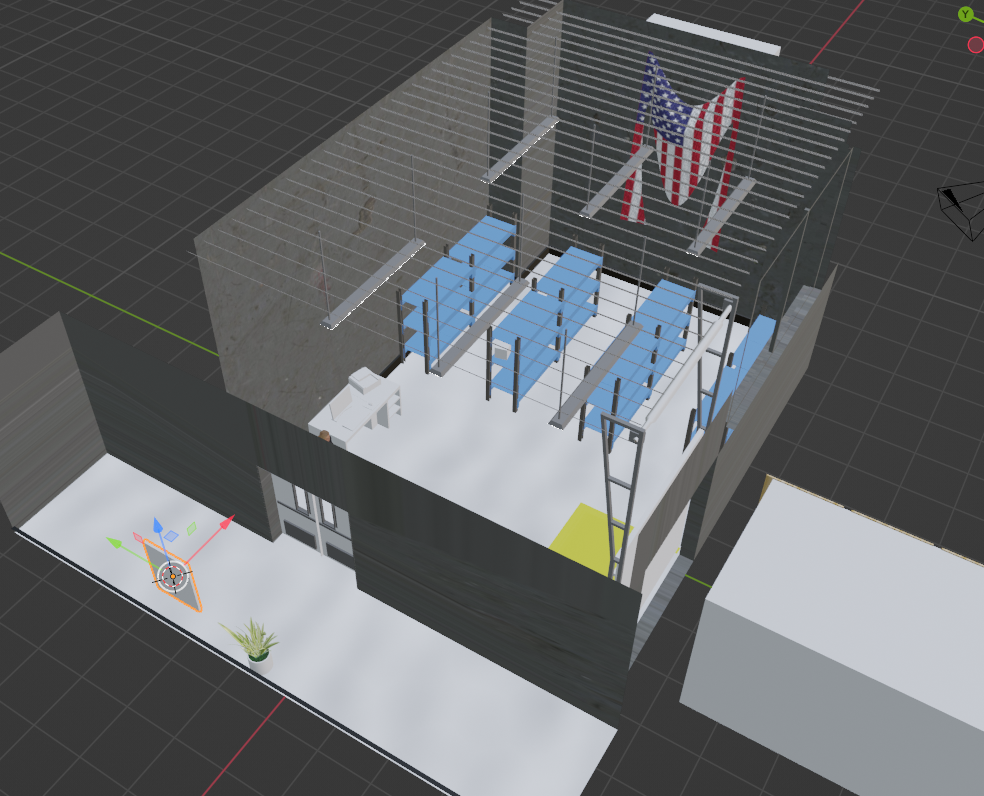

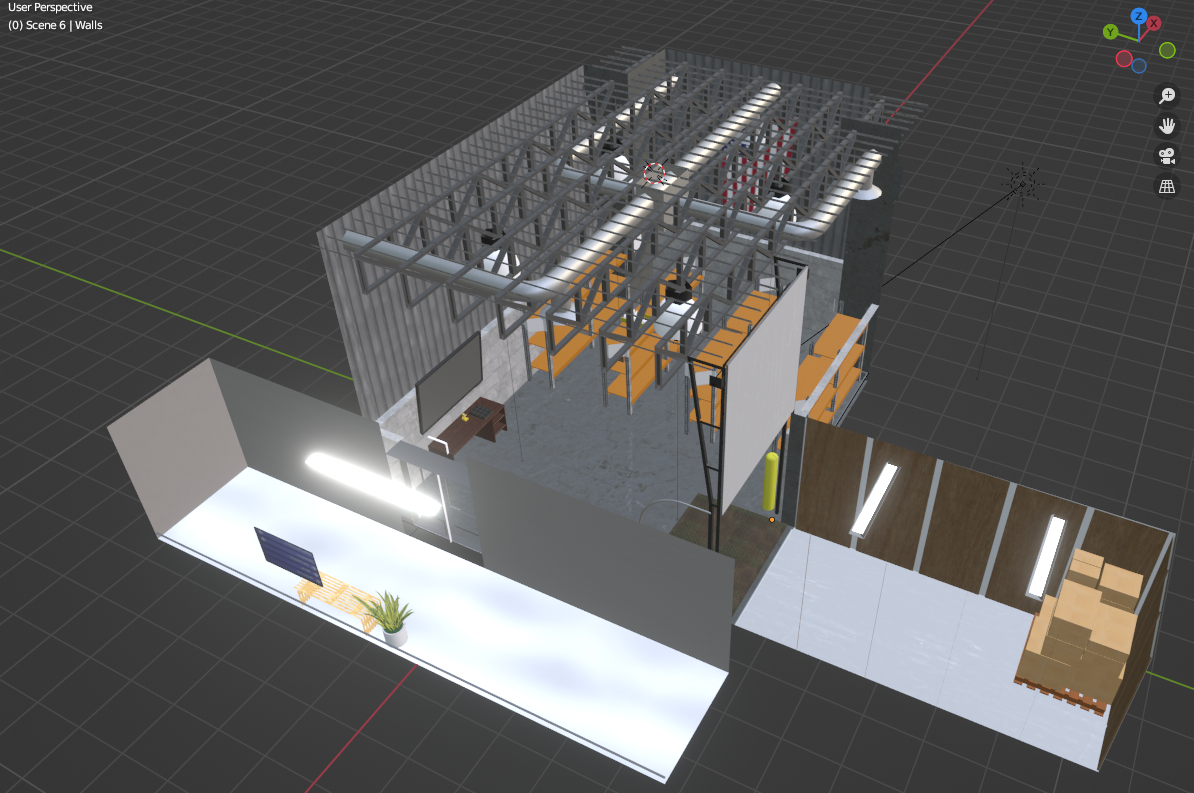

Screenshot of the final warehouse model in Blender

concept

VR's come a long way, even since I first tried it in 2016. I've used the Valve Index over the last year and only recently picked up a Meta Quest 2. Trying out wireless VR and the impressive capability of the headset really made me want to take a swing at VR development.

Ellumen works in training, and after hearing about companies like StriVR creating VR training for Walmart, I proposed we do the same. My boss, Mary Caroll, gave me the go-ahead and I began development.

process

To create a realistic demo, I worked with one of the Ellumen trainers (Jeremy Wiley) to find relevant tasks that would work well in VR. We eventually settled on some basic inventory duties that would give participants experience navigating and manipulating objects in the virtual world.

To create the experience, I knew I'd make the models in Blender and then assemble the scene in either Unreal Engine or Unity. I'd used Unity briefly in college and found the VR community to be larger for that engine. Further, a friend suggested I look into the VR Interaction Framework (VRIF) from Bearded Ninja Games. That Unity plugin streamlined the basic functionality and allowed me to rapidly get something in front of stakeholders!

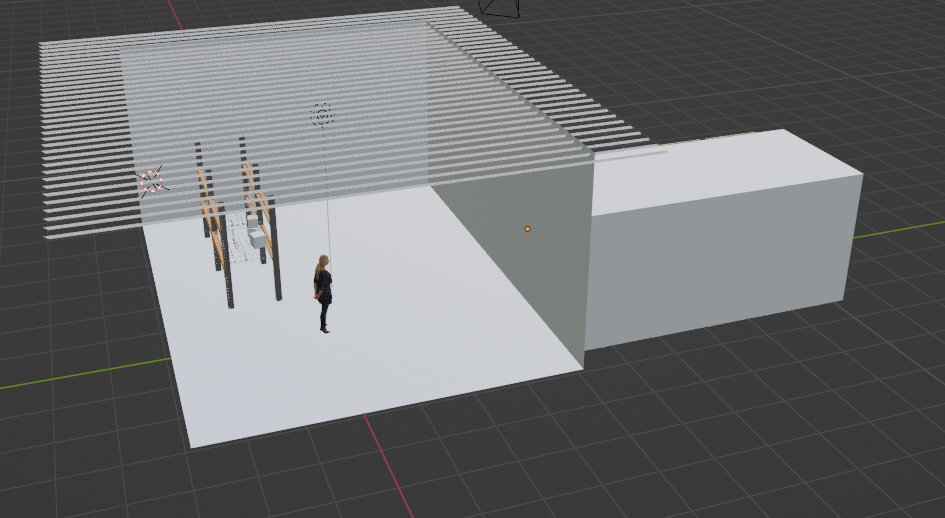

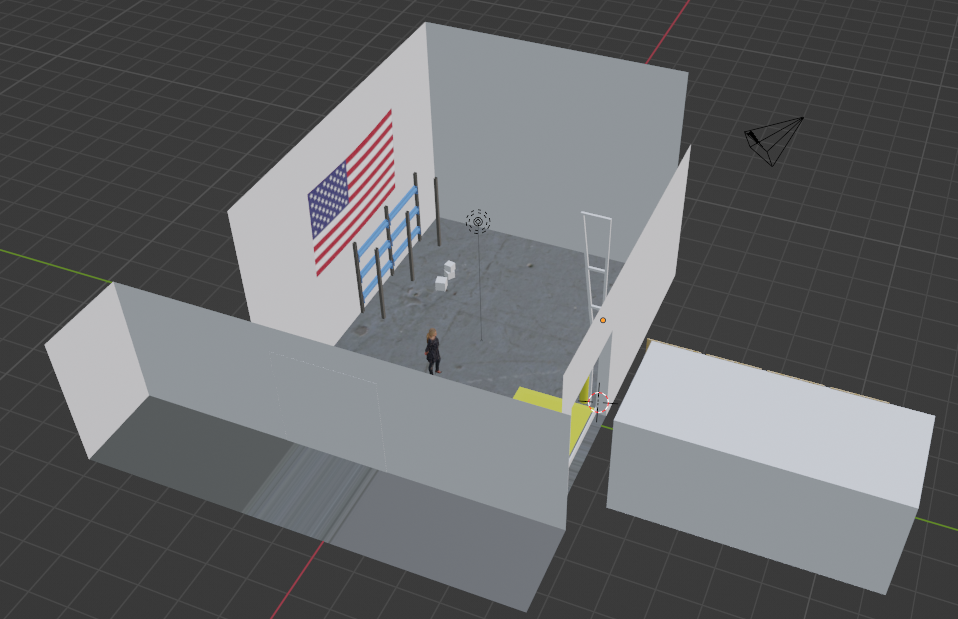

With the technology settled, I began creating the models and scenes in Blender.

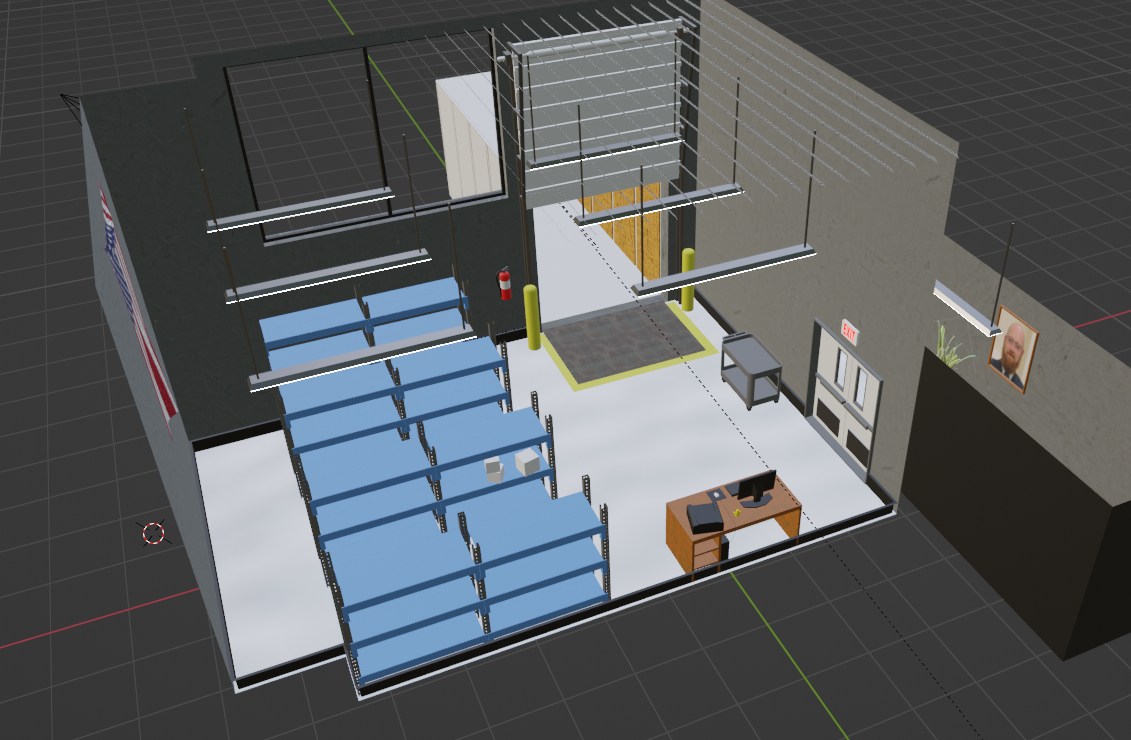

Blocking out the scene

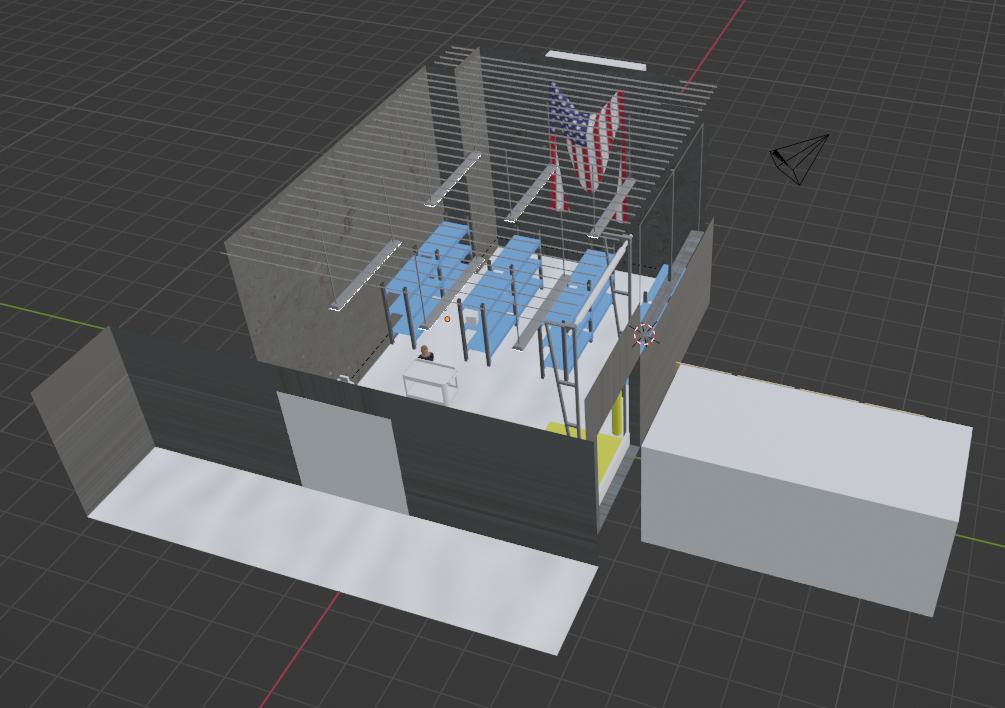

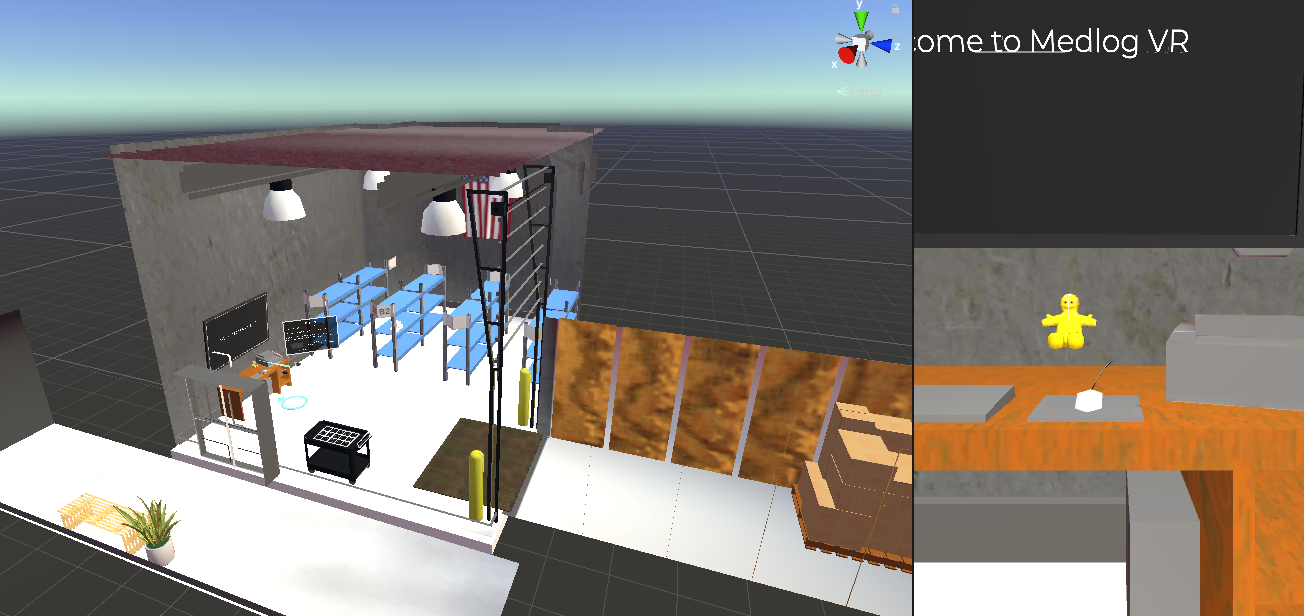

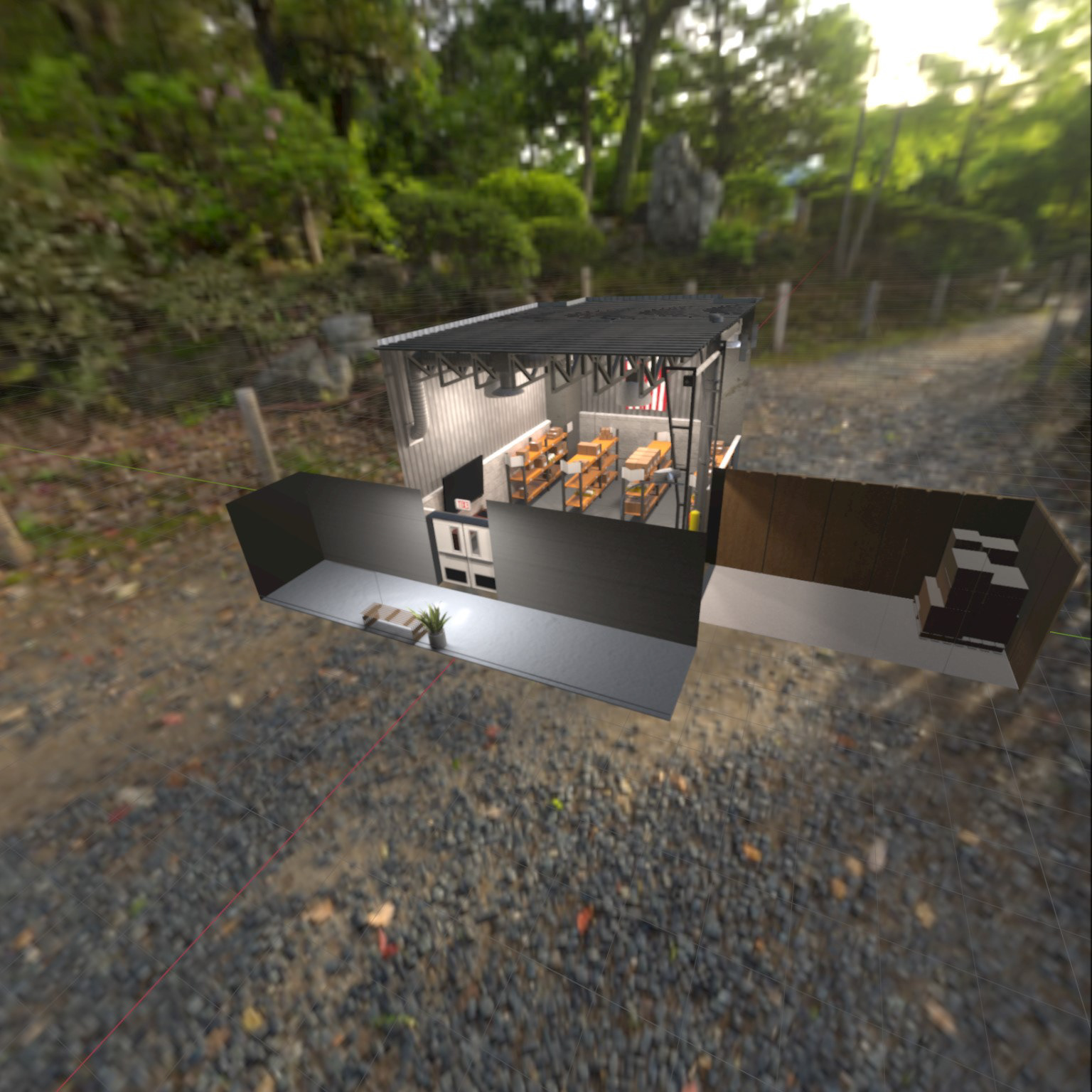

V1 set up in Unity

I had the first demo ready in a couple of weeks. After having friends and coworkers playtest it, I dove back in to fix bugs and complete the experience.

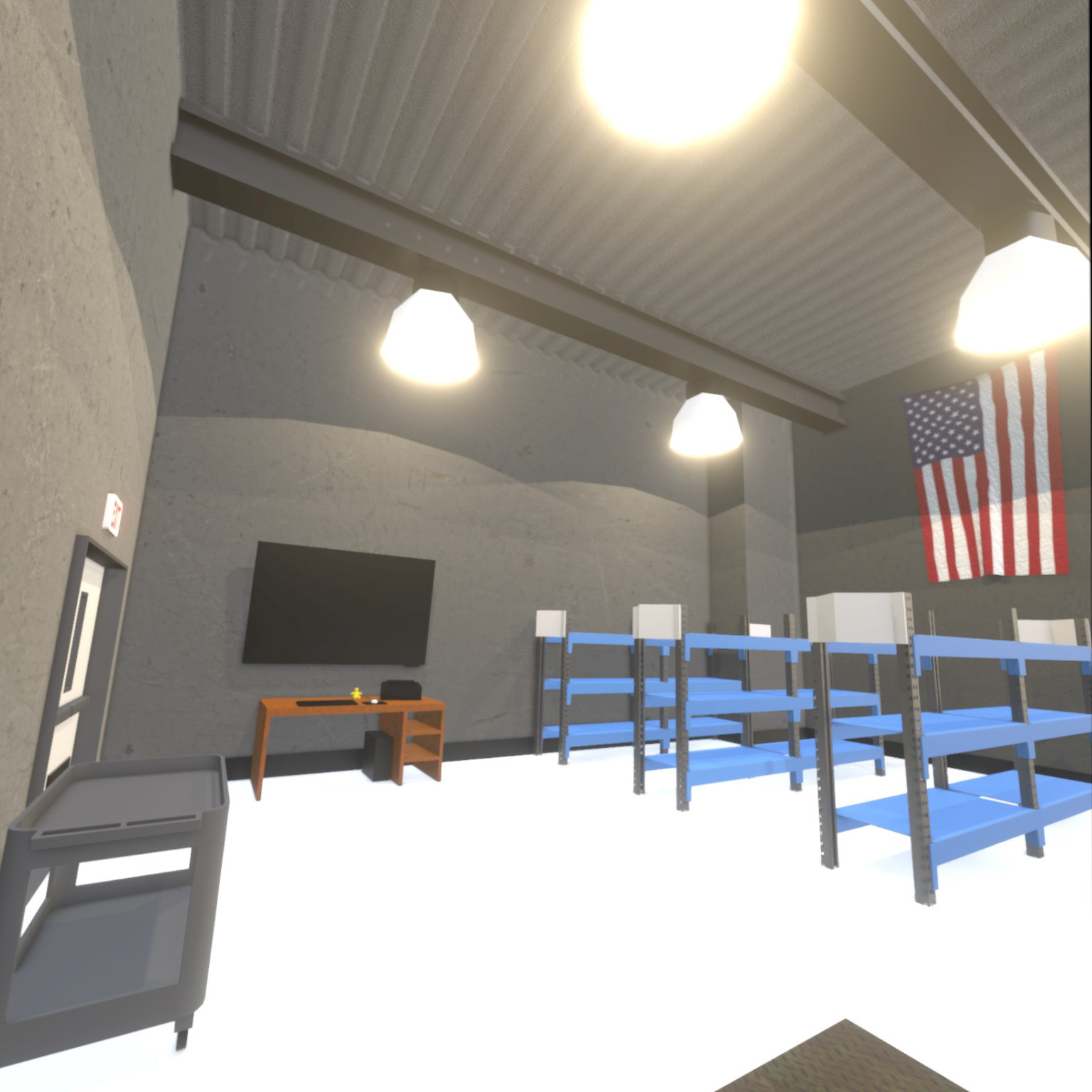

I wasn't happy with the lighting and overall polish of the scene, so I added more details and baked out the textures with raytraced lighting. The lighting and improved textures were a massive boost to the experience; they added a lot to the feeling of presence and the realism of the environment.

I wrote some scripts to handle different kinds of tutorial steps, then grouped those under a "Step Manager" object. The two primary step types are UI Steps and Trigger Steps, each of which extends an abstract Step class.
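The real scripts live in the Unity project and aren't reproduced here. As a rough, language-neutral illustration of the pattern, here is a minimal sketch in Python. Every name in it (`Step`, `UIStep`, `TriggerStep`, `StepManager`, the `handle` method and the event strings) is hypothetical and chosen for this example only; it assumes a UI Step finishes when the participant confirms a panel and a Trigger Step finishes when they enter a named trigger volume.

```python
from abc import ABC, abstractmethod
from typing import Iterable, Optional


class Step(ABC):
    """One tutorial step; subclasses decide what completes it."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.completed = False

    @abstractmethod
    def handle(self, event: str) -> None:
        """React to an event and set `completed` when the step is done."""


class UIStep(Step):
    """Assumed behavior: completes when the participant confirms a panel."""

    def __init__(self, name: str, message: str) -> None:
        super().__init__(name)
        self.message = message

    def handle(self, event: str) -> None:
        if event == "confirm":
            self.completed = True


class TriggerStep(Step):
    """Assumed behavior: completes when a specific trigger volume is entered."""

    def __init__(self, name: str, trigger_id: str) -> None:
        super().__init__(name)
        self.trigger_id = trigger_id

    def handle(self, event: str) -> None:
        if event == f"enter:{self.trigger_id}":
            self.completed = True


class StepManager:
    """Holds the steps in order and advances when the current one completes."""

    def __init__(self, steps: Iterable[Step]) -> None:
        self.steps = list(steps)
        self.index = 0

    @property
    def current(self) -> Optional[Step]:
        return self.steps[self.index] if self.index < len(self.steps) else None

    @property
    def finished(self) -> bool:
        return self.index >= len(self.steps)

    def dispatch(self, event: str) -> None:
        step = self.current
        if step is None:
            return  # tutorial already finished; ignore further events
        step.handle(event)
        if step.completed:
            self.index += 1
```

Because the manager only talks to the abstract `Step` interface, adding a new kind of step means writing one more subclass without touching the manager itself.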

For the second version, I also cleaned up the UI. A new font, new colors, and rounded corners on the panels helped it feel more polished.

Another couple of weeks later, the new experience was ready to demonstrate to executives.

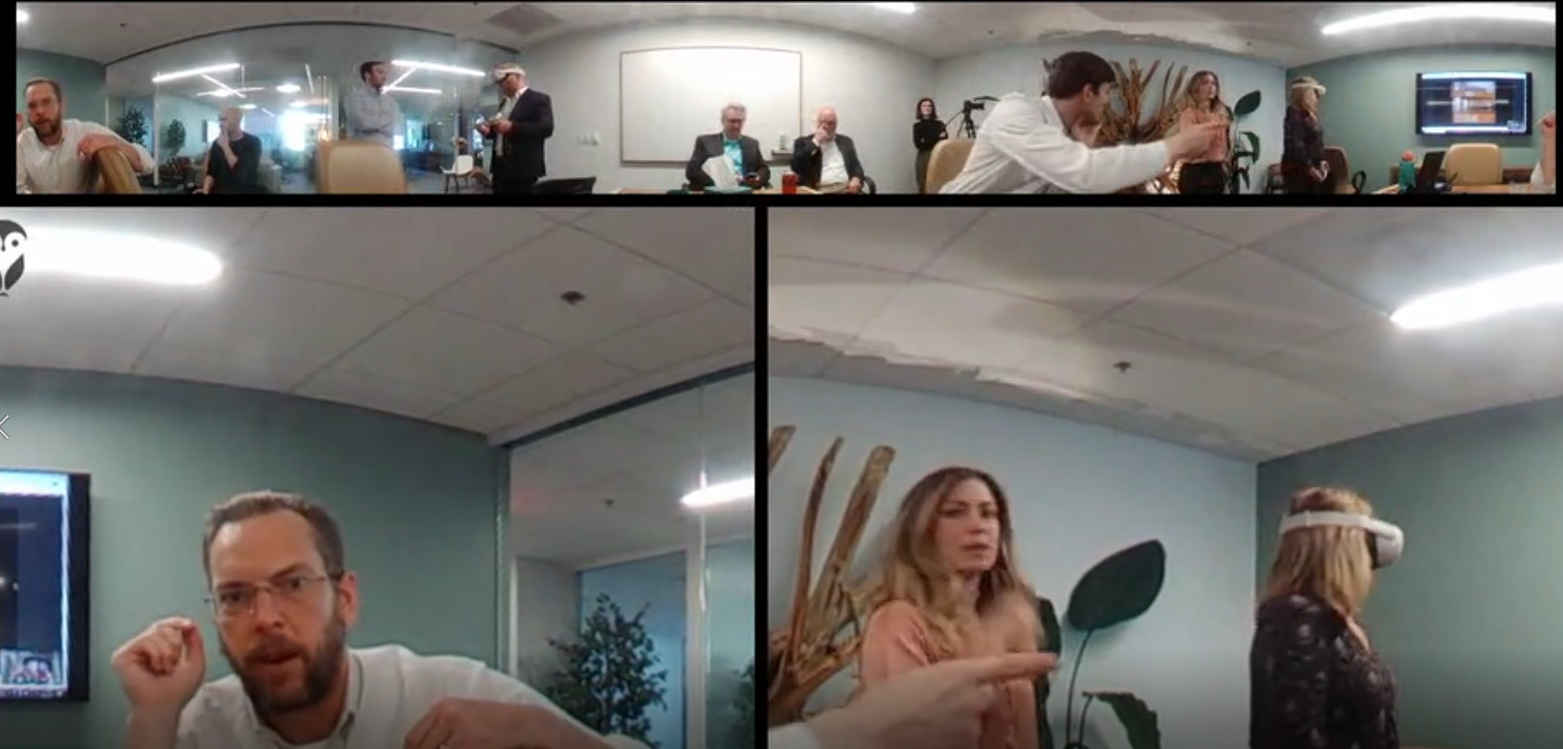

results

The demo was a blast; it was great to see so many people try the experience (for some, it was their first time in VR) and the flurry of new ideas that followed. I'm looking forward to future projects and expanding our capabilities in VR training!